This article explores A/B testing from the basics to advanced techniques, with real-world examples and best practices. It is written for anyone looking to implement or improve A/B testing in their organization.

Introduction

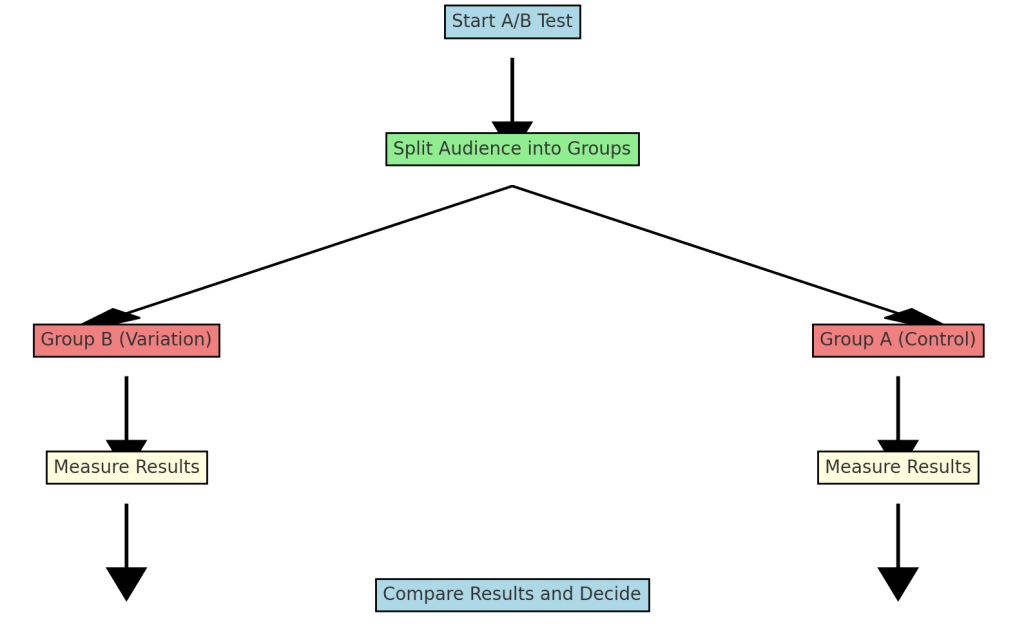

A/B testing, also known as split testing, is a powerful method used to compare two versions of a webpage, app feature, or any other element of a product to determine which performs better. This process is essential in making data-driven decisions that enhance user experiences, optimize conversion rates, and ultimately drive revenue growth. A/B testing is widely used across various industries, including marketing, product management, and UX/UI design, to validate hypotheses and ensure that changes positively impact key performance indicators (KPIs).

Why A/B Testing Matters

In today’s competitive market, making informed decisions based on data rather than assumptions is critical. A/B testing provides a structured approach to testing changes before fully implementing them, reducing risks and ensuring that any updates to a product or service are indeed beneficial.

- Enhancing User Experience: A/B testing allows you to experiment with different designs, content, or features, helping you understand what resonates best with your users.

- Driving Conversion Rates and Revenue: By identifying the most effective strategies through A/B testing, businesses can significantly boost their conversion rates and revenue.

- Reducing Risks: A/B testing minimizes the risk of implementing changes that could negatively impact user experience or business outcomes.

Core Concepts of A/B Testing

Hypothesis Formation

A well-defined hypothesis is the foundation of any A/B test. It should be specific, measurable, and aligned with your business objectives. For example, rather than hypothesizing that “changing the button color will improve conversion rates,” a stronger hypothesis would be, “Changing the call-to-action button from blue to green will increase the click-through rate by at least 5% relative to the control.” Stating whether the target is a relative or an absolute change matters, because it determines how large a sample the test needs.

Test Design

- Control vs. Variation: The control is the original version, while the variation is the modified version being tested. Both versions are presented to different segments of users to compare performance.

- Randomization: To ensure that the test results are unbiased, users should be randomly assigned to either the control or the variation group.

- Sample Size Determination: A common mistake in A/B testing is running a test with too small a sample, which leads to unreliable results. The required sample size depends on the baseline conversion rate, the smallest effect you want to detect, the significance level, and the desired statistical power. A/B test calculators compute it from these inputs.

- Metrics Selection: The KPIs chosen should directly reflect the impact of the change being tested. For example, if you are testing a new checkout process, the primary metric might be the checkout completion rate.
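Two of the design steps above, randomization and sample size determination, can be sketched in a few lines of Python. This is a minimal illustration rather than a production implementation: the function names, the 50/50 split, and the default significance level and power are assumptions made for the example, and the sample size uses the standard normal-approximation formula for comparing two proportions.

```python
import hashlib
import math
from statistics import NormalDist


def assign_variant(user_id: str, experiment: str) -> str:
    """Deterministically bucket a user into 'control' or 'variation' (50/50)."""
    # Hashing the experiment name together with the user id means the same
    # user always sees the same version, and each experiment gets its own split.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # 0..99
    return "control" if bucket < 50 else "variation"


def sample_size_per_group(baseline: float, relative_lift: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed per group to detect the lift with a two-sided z-test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # power requirement
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical inputs: 10% baseline conversion, 5% relative lift.
print(assign_variant("user-123", "green-cta-button"))
print(sample_size_per_group(baseline=0.10, relative_lift=0.05))
```

With these hypothetical inputs the required sample is in the tens of thousands of users per group, which shows why small expected lifts on low baseline rates need long-running tests.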

Execution Phases

- Planning: Choose the right tools and set up the environment where the test will be conducted. Clearly define your hypothesis, KPIs, and the duration of the test.

- Implementation: Deploy the A/B test using the chosen tool (such as Optimizely or VWO). Ensure that the variations are correctly set up and that users are being tracked accurately.

- Monitoring: Throughout the test, monitor for technical problems such as broken tracking or an uneven traffic split. Avoid acting on interim results, as this can lead to premature conclusions.

- Analysis: Once the test has reached its planned sample size and duration, analyze the results. Check whether the differences in the KPIs between the control and variation are statistically significant and large enough to matter in practice. Tools like Google Analytics can be used for deeper analysis.

- Iteration: Based on the results, you might choose to implement the winning variation, refine it, or run follow-up tests.
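For the analysis phase, a common choice for conversion-style metrics is a two-proportion z-test. The sketch below assumes a single binary metric (converted or not) and uses made-up counts; the function name and the numbers are illustrative only.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (absolute lift, z statistic, two-sided p-value) for B vs. A."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Under the null hypothesis both groups share one conversion rate,
    # so the standard error is computed from the pooled rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions at all, or everyone converted
        return rate_b - rate_a, 0.0, 1.0
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, z, p_value


# Hypothetical counts, for illustration only.
lift, z, p_value = two_proportion_z_test(conv_a=480, n_a=10_000,
                                         conv_b=560, n_b=10_000)
print(f"lift={lift:.4f}, z={z:.2f}, p={p_value:.4f}")
```

A p-value below the chosen significance level (often 0.05) suggests the difference is unlikely to be noise, but the size of the lift still has to justify the cost of shipping the change.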

Best Practices in A/B Testing

Avoiding Common Pitfalls

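- Peeking: Stopping the test as soon as the results look promising is a common mistake. Each extra look at a standard significance test is another chance for random noise to cross the threshold, so stopping early inflates the false-positive rate and can lead to implementing ineffective changes. Decide the sample size in advance and let the test run until it is reached.

- Multiple Comparison Problem: Testing many variations, metrics, or segments at once raises the chance that at least one of them looks significant purely by chance. Limit the number of comparisons or adjust the significance threshold (for example, with a Bonferroni correction). Separately, running multiple tests simultaneously on the same audience can skew results if the changes interact, so ensure concurrent tests are independent of each other.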

- Adequate Test Duration: Ensure your test runs long enough to account for variations in user behavior across different times and days. Ending a test too soon can result in misleading data.
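The cost of peeking can be shown with a small simulation of A/A tests, where both groups receive the same experience and any "significant" result is a false positive. This is a rough sketch under assumed parameters (a 10% conversion rate, ten evenly spaced peeks, a fixed random seed), not a benchmark.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, conv_b: int, n: int) -> float:
    """Two-sided p-value of a two-proportion z-test with n users per arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 1.0
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(runs=400, users_per_arm=1000, peek_every=100,
                      rate=0.10, alpha=0.05, seed=7):
    """Run A/A tests and return (false-positive rate when stopping at any
    significant peek, false-positive rate when testing once at the end)."""
    rng = random.Random(seed)
    stopped_early = significant_at_end = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        crossed = False
        for n in range(1, users_per_arm + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n % peek_every == 0 and p_value(conv_a, conv_b, n) < alpha:
                crossed = True  # a peeker would have stopped and declared a winner
        stopped_early += crossed
        significant_at_end += p_value(conv_a, conv_b, users_per_arm) < alpha
    return stopped_early / runs, significant_at_end / runs


peeking_rate, fixed_rate = simulate_aa_tests()
print(f"false positives with peeking: {peeking_rate:.1%}")
print(f"false positives with one final test: {fixed_rate:.1%}")
```

Because the final look is also one of the peeks, the peeking rate can never be lower than the fixed-horizon rate, and in practice it is usually several times higher.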

Segmentation & Personalization

- Audience Segmentation: Segmenting your audience can help you understand how different user groups respond to changes. This allows for more tailored improvements that cater to specific segments.

- Personalization: Personalized A/B tests can be more effective than one-size-fits-all tests. Tailor the variations to different user segments based on their behavior, demographics, or other factors.
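Segment-level results can be computed with a simple grouping step. The record layout below, a tuple of segment, variant, and a converted flag, is a hypothetical format chosen for the example; real event data will look different.

```python
from collections import defaultdict


def conversion_by_segment(events):
    """Map (segment, variant) to its conversion rate.

    `events` is an iterable of (segment, variant, converted) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [conversions, users]
    for segment, variant, converted in events:
        counts[(segment, variant)][0] += int(converted)
        counts[(segment, variant)][1] += 1
    return {key: conv / users for key, (conv, users) in counts.items()}


# Hypothetical records, for illustration only.
events = [
    ("mobile", "control", True),
    ("mobile", "control", False),
    ("mobile", "variation", True),
    ("desktop", "control", False),
]
for (segment, variant), rate in sorted(conversion_by_segment(events).items()):
    print(f"{segment:8s} {variant:10s} {rate:.0%}")
```

Each segment is a separate comparison on a smaller sample, so segment-level differences are best treated as leads for follow-up tests rather than as conclusions.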

Incremental vs. Radical Testing

- Incremental Changes: Small tweaks, such as changing the color of a button or tweaking copy, are easier to implement and less risky. However, the impact may also be smaller.

- Radical Changes: Larger, more significant changes can have a more substantial impact, but they come with higher risk. These should be carefully tested with strong hypotheses to ensure the potential benefits outweigh the risks.

Tools and Technologies

Popular A/B Testing Tools

- Optimizely: Known for its powerful targeting and personalization features, Optimizely is a favorite among marketers and product managers for running complex A/B tests.

- Google Optimize: This free tool integrated with Google Analytics and was a popular option for teams already using Google’s suite of products. Google discontinued it in September 2023, so it is no longer available for new tests.

- VWO (Visual Website Optimizer): VWO is popular for its user-friendly interface and robust testing capabilities, including multivariate testing and heatmaps.

Integrating A/B Testing with Data Analytics

Combining A/B testing with broader data analytics efforts can provide deeper insights. For example, using tools like Google Analytics or Mixpanel alongside your A/B testing tool can help you understand the long-term impact of changes beyond the immediate test results. This integration allows for a more holistic view of user behavior and the effectiveness of the tested changes.

Case Studies

Successful A/B Tests

- Airbnb’s Homepage Redesign: Airbnb used A/B testing to optimize its homepage, leading to a significant increase in user engagement and bookings. By testing various elements, including layout and messaging, they identified the most effective combination to drive conversions.

- Booking.com’s Pricing Strategy: Booking.com frequently uses A/B testing to refine its pricing display and booking process. Through continuous testing, they have optimized the user experience, leading to higher conversion rates and customer satisfaction.

Lessons from Failed A/B Tests

- Twitter’s Retweet Button: Twitter once tested a “Share” button to replace the “Retweet” button. Despite initial positive signs, user feedback and engagement dropped significantly, leading them to revert to the original design. The lesson here is that even seemingly minor changes can have unexpected consequences, and it’s crucial to consider both quantitative data and qualitative user feedback.

Advanced A/B Testing Techniques

Multi-Armed Bandit Testing

Unlike traditional A/B testing, which waits until enough data is collected to make a decision, multi-armed bandit testing dynamically allocates more traffic to the better-performing variation as the test progresses. This method can be more efficient and reduce the opportunity cost of exposing users to a suboptimal version.
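One widely used bandit strategy is Thompson sampling. The simulation below is a minimal sketch: the true conversion rates are invented and would be unknown in a real test, and the uniform Beta(1, 1) prior is an assumption made for the example.

```python
import random


def thompson_sampling(true_rates, rounds=5000, seed=42):
    """Simulate a Bernoulli bandit; return how many users each arm received."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    pulls = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible conversion rate for each arm from its Beta
        # posterior, then show the next user the arm with the highest draw.
        draws = [rng.betavariate(s + 1, f + 1)
                 for s, f in zip(successes, failures)]
        arm = draws.index(max(draws))
        pulls[arm] += 1
        # Simulated user response; in production this is the observed outcome.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls


# Hypothetical true rates: control converts at 5%, variation at 15%.
print(thompson_sampling([0.05, 0.15]))
```

Early on, both arms receive traffic because their posteriors overlap. As evidence accumulates, the weaker arm wins fewer draws and its share of traffic shrinks.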

Sequential Testing

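Sequential testing allows for continuous monitoring of the test results and for stopping at any point without committing to a fixed sample size in advance. To keep the false-positive rate under control, it replaces the single fixed significance threshold with stopping boundaries designed for repeated looks. This approach can be more flexible and quicker than traditional methods, particularly in fast-paced environments where time is critical.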
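A simple, deliberately conservative way to make repeated looks safe is to split the significance level evenly across a planned number of looks (a Bonferroni-style correction). The sketch below assumes the number of looks is fixed in advance, which fully sequential methods do not require, and it is less efficient than group-sequential boundaries such as Pocock or O’Brien-Fleming. The function name and the counts are illustrative.

```python
import math
from statistics import NormalDist


def sequential_decision(looks, planned_looks, alpha=0.05):
    """Check interim looks in order and stop at the first significant one.

    `looks` is a list of cumulative (conv_a, n_a, conv_b, n_b) counts.
    Each look is tested at alpha / planned_looks, so the overall
    false-positive rate stays at or below alpha.
    Returns (look_number, p_value) on an early stop, otherwise None.
    """
    per_look_alpha = alpha / planned_looks
    for i, (conv_a, n_a, conv_b, n_b) in enumerate(looks, start=1):
        pooled = (conv_a + conv_b) / (n_a + n_b)
        se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
        if se == 0:
            continue  # not enough variation in the data to test yet
        z = (conv_b / n_b - conv_a / n_a) / se
        p = 2 * (1 - NormalDist().cdf(abs(z)))
        if p < per_look_alpha:
            return i, p
    return None


# Hypothetical cumulative counts at the first two of five planned looks.
looks = [(50, 1000, 62, 1000), (100, 2000, 150, 2000)]
print(sequential_decision(looks, planned_looks=5))
```

With five planned looks, each look must reach p < 0.01 rather than p < 0.05, which is the price paid for being allowed to stop early.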

Bayesian vs. Frequentist Approaches

- Frequentist Approach: This traditional method typically relies on a fixed sample size and asks how likely a difference at least as large as the observed one would be if there were no true effect (the p-value). It’s straightforward but can be less flexible.

- Bayesian Approach: The Bayesian method incorporates prior knowledge and updates the probability that a variation is better as more data becomes available. It’s more flexible and can provide more intuitive results, such as “there is a 95% probability that the variation beats the control,” especially in complex testing scenarios.
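For a conversion metric, the Bayesian calculation can be sketched with a Beta-Binomial model and Monte Carlo sampling. The uniform Beta(1, 1) prior, the number of draws, and the counts below are assumptions chosen for illustration; an informative prior based on past tests could be substituted.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Estimate P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Hypothetical counts, for illustration only.
print(prob_b_beats_a(conv_a=100, n_a=1000, conv_b=150, n_b=1000))
```

The result reads directly as the probability that the variation is better, given the data and the prior, which is the kind of statement stakeholders often want and that a p-value does not provide.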

Conclusion

The Future of A/B Testing

As technology advances, A/B testing will continue to evolve, with trends leaning towards greater personalization, the integration of AI, and real-time data analysis. These developments will make A/B testing more efficient and enable businesses to make even more informed decisions.

Final Thoughts

A/B testing is a critical tool for any organization looking to optimize its products, services, or user experience. By following best practices, avoiding common pitfalls, and continuously iterating on tests, businesses can ensure that they are making the best possible decisions based on reliable data. As the landscape of technology and user expectations continues to change, embracing a culture of experimentation will be key to staying ahead.

